Alessio Grancini is a Senior AR Prototyper Engineer at Magic Leap, focusing on translating design into software applications for the next generation of AR wearable devices. His work starts in Unity but expands into many realms, from curating a design process to developing an interaction concept in detail through to production.

Let’s get into it: AI is changing everything. How is it affecting your work?

Things are changing rapidly these days. AI is disrupting many domains with its valuable applications, but in general, technology just moves fast.

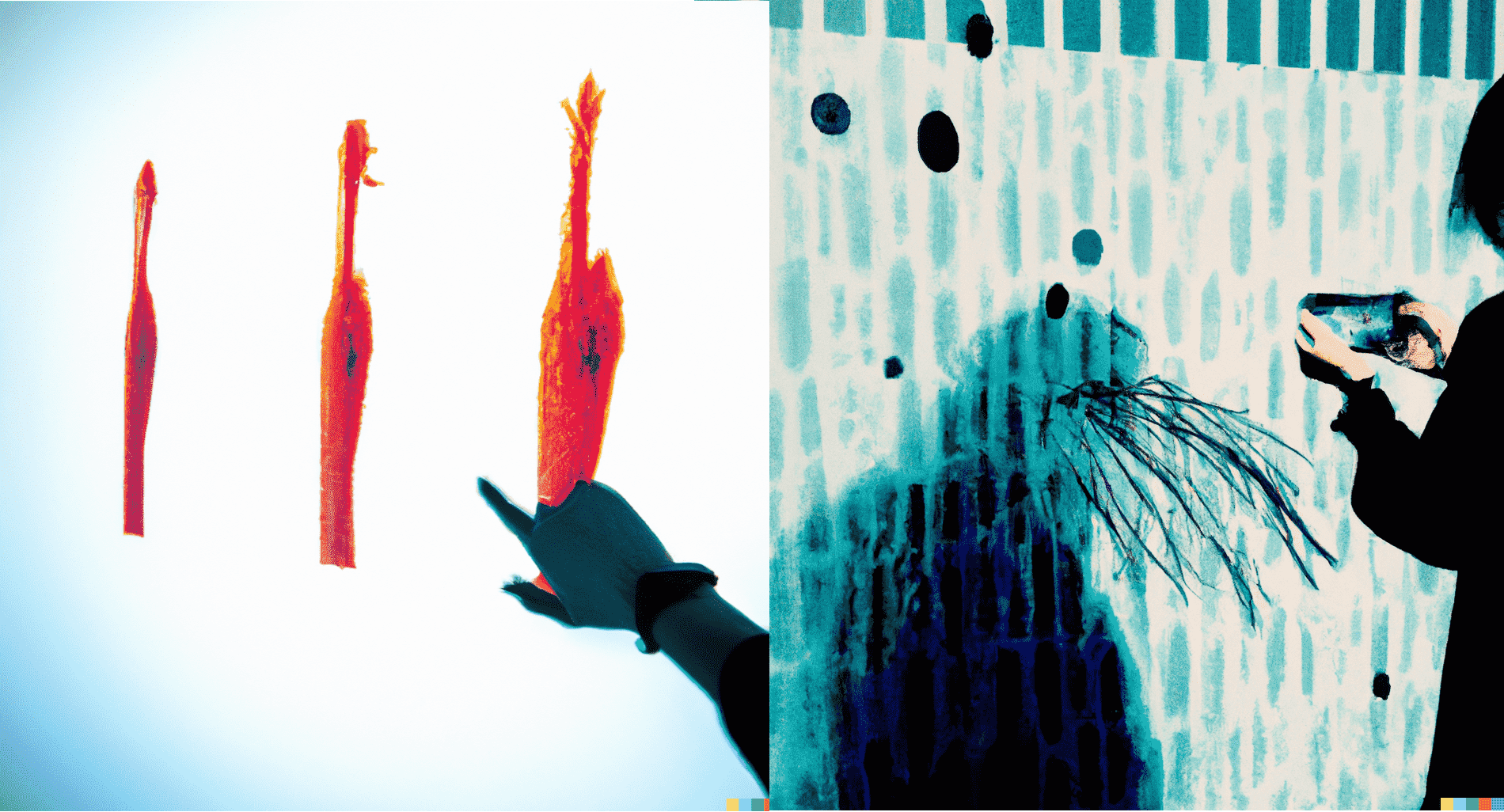

Every social media feed and news site shows a substantial increase in AI products that achieve incredible results with minimal effort. This disruptive way of thinking about “doing things” also inspired the title of my talk for Design Matters Tokyo 23, “Everything Interface”. By this title I mean something like taking a photo of a box and turning it into a building through inpainting. In other words, extending our ideas and bringing them to life with the creativity we are all born with.

A common thread I’ve drawn from demos like @bilawhalsidhu’s Stable Diffusion explorations or @karenxchang’s Video to Movie is that we are somehow defining “parallel traits” or “skeletons of reality”: references that turn into products. It reminds me a little of an extreme interpretation of the Instrumentality Project from Evangelion, a popular anime in which the flaws of every living being would be complemented by the strengths of others.

My background is in architecture, and that is where I learned that design takes inspiration from literally everything.

What does ‘Design Matters’ mean for your current goals?

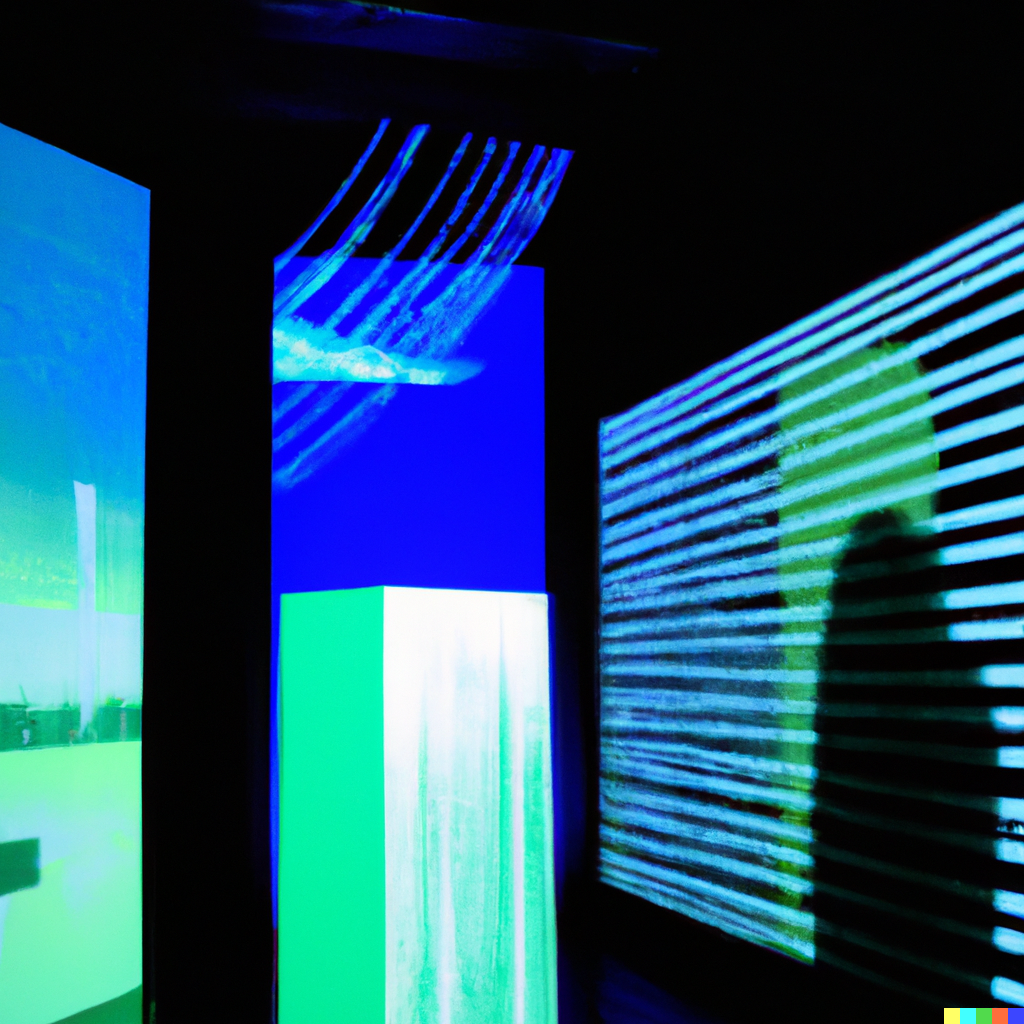

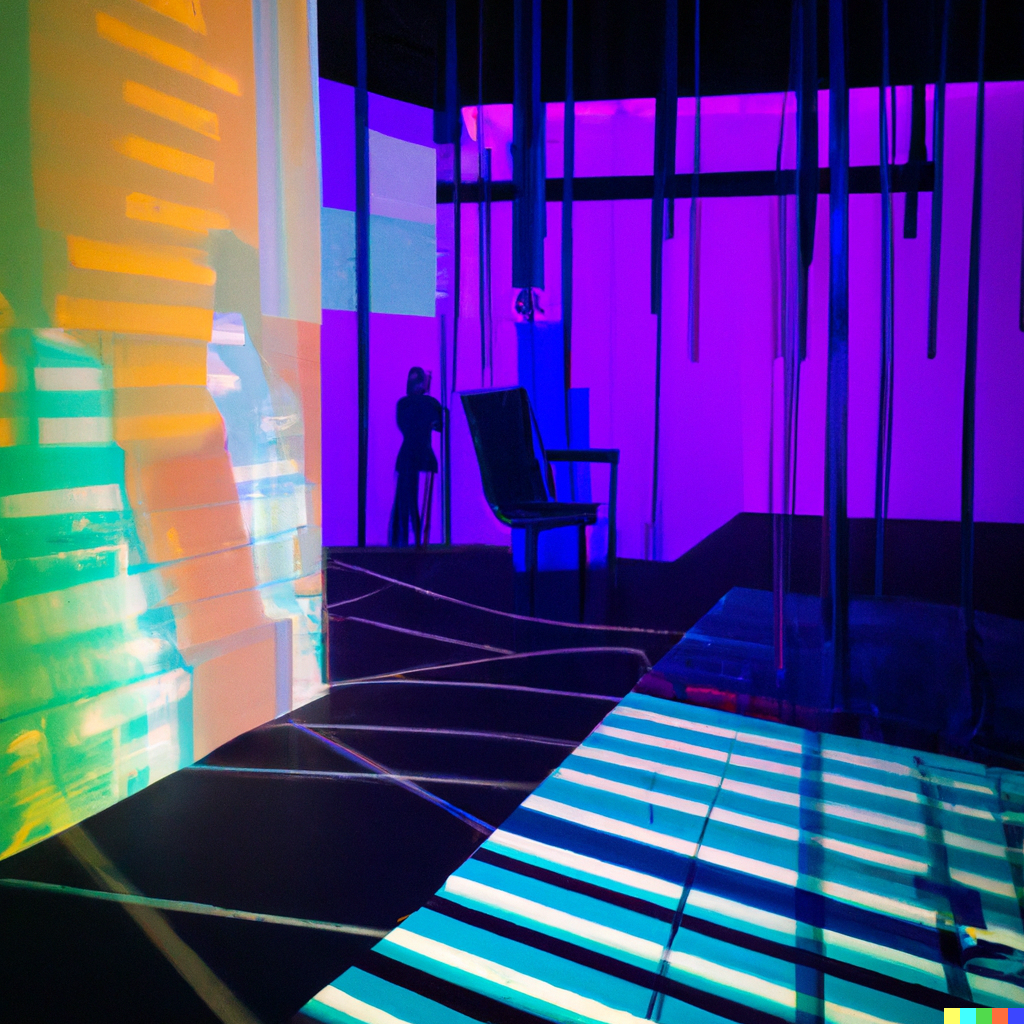

I took on the challenge of building an app for the Design Matters Tokyo 23 conference. I’m a big fan of a project-based approach to learning new things, and I like to put myself in situations where a tough deadline pushes me to cover ground I have never covered. In the past, I’ve experimented with AI libraries such as TensorFlow for creating face filters and Luxonis DepthAI for car navigation, and I saw the potential AI has for object tracking and segmentation.

This time, I want to dig deeper and follow the AI wave, which I consider just the tip of the iceberg. I chose to build the app with Unity, a popular game development platform that is increasingly used to build all sorts of XR products, because I want to deploy the project on a device and make it available on multiple platforms. Unity is very handy because it supports cross-platform deployment, which is why it has been my first choice ever since my solo projects at school. I’ve always liked a compact format that can be consumed with minimal setup.

Courtesy of Alessio Grancini

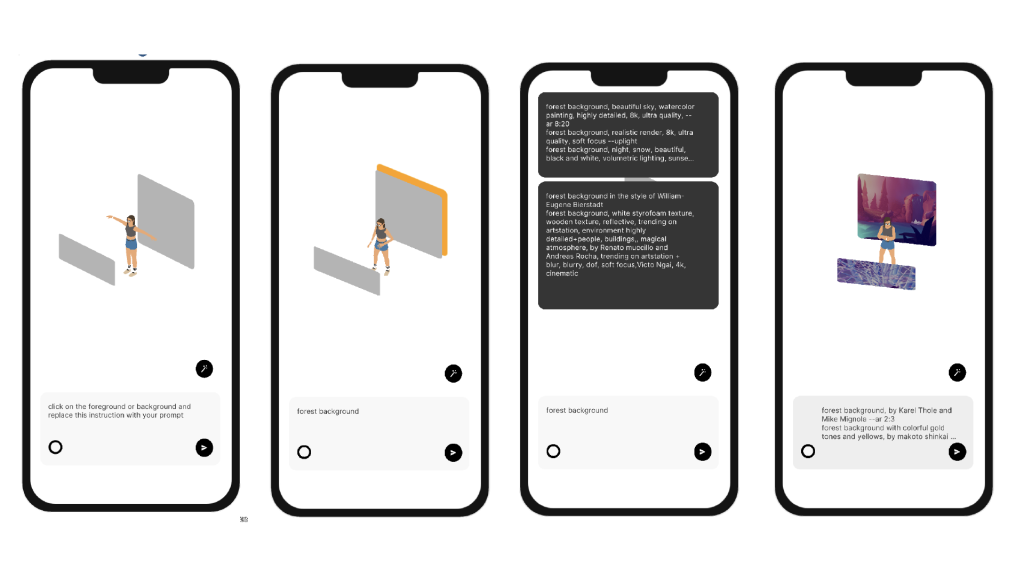

iOS app prototype

The app lets you build a composite scene through a series of steps. With this work, I tried to combine several available open-source tools, both to understand them and to brainstorm what an app of the future could look like.

The app flow goes roughly as follows:

- The user enters the app

- Views a three-dimensional miniature scene

- Selects parts of the scene

- This selection step draws on research such as SAM (Meta’s Segment Anything Model)

- Enters a prompt to generate a texture for the selected parts

- The prompt is “augmented” with suggestions from language models

- The texture is generated

- Repeats the process to build up the scene

- Brings the final composition into AR
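The flow above can be sketched as a simple loop over a scene model. This is a minimal Python sketch under stated assumptions, not the app’s actual Unity implementation: `ScenePart`, `Scene`, `augment_prompt`, `texture_pass`, and `export_for_ar` are hypothetical names, the language-model step is replaced by a naive list of suggested modifiers, and texture generation is stubbed out by recording the prompt each part would be generated from.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ScenePart:
    name: str
    texture_prompt: Optional[str] = None  # prompt this part's texture would be generated from


@dataclass
class Scene:
    parts: Dict[str, ScenePart] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # one entry per texturing pass


def augment_prompt(prompt: str, suggestions: List[str]) -> str:
    """Hypothetical stand-in for the language-model step: append suggested
    modifiers that the user's prompt does not already contain."""
    extra = [s for s in suggestions if s.lower() not in prompt.lower()]
    return ", ".join([prompt] + extra)


def texture_pass(scene: Scene, selected: List[str], prompt: str,
                 suggestions: List[str]) -> str:
    """One iteration of the loop: select parts, augment the prompt, 'generate' a texture.
    Generation is stubbed: we only record the final prompt on each selected part."""
    final_prompt = augment_prompt(prompt, suggestions)
    for name in selected:
        scene.parts[name].texture_prompt = final_prompt
    scene.history.append(final_prompt)
    return final_prompt


def export_for_ar(scene: Scene) -> Dict[str, Optional[str]]:
    """Snapshot handed to the AR session (hypothetical handoff format)."""
    return {name: part.texture_prompt for name, part in scene.parts.items()}


# Usage: two passes over a three-part miniature, then hand the result to AR.
scene = Scene({n: ScenePart(n) for n in ("floor", "wall", "roof")})
texture_pass(scene, ["wall"], "red brick", ["weathered", "Red Brick"])
texture_pass(scene, ["roof", "floor"], "terracotta tiles", ["sunlit"])
print(export_for_ar(scene))
```

Keeping the scene as plain data makes the back-and-forth between the screen view and AR cheap: both views can read the same snapshot.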

Through this process, the user can gain a thorough understanding of the space being created, since the app lets them switch back and forth between the on-screen representation and the immersive experience to make changes.

Once the user is satisfied with the scene, the AR scene inherits this information and launches, bringing the designed artifacts to real-life scale.

For now, the AR scene segments people. I deliberately used a segmentation model that produces a person mask, so that no post-production effects are applied to people. The app currently focuses on real-time virtual production, but I feel it is too soon to say what this could become: the more I iterate on it, the more new development paths I see.
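The person mask described above boils down to a per-pixel blend: where the mask marks a person, the original camera pixel is kept; elsewhere, the post-production effect is shown. A minimal sketch, assuming the segmentation model outputs a soft mask in [0, 1] (1 = person) at the same resolution as the frame. Images are plain nested lists of RGB tuples to keep the example dependency-free, and `composite_keep_people` is a hypothetical name, not part of any library.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]
Mask = List[List[float]]


def composite_keep_people(frame: Image, effect: Image, person_mask: Mask) -> Image:
    """Blend per pixel: out = m * frame + (1 - m) * effect, where m = 1 marks a person."""
    out: Image = []
    for frame_row, effect_row, mask_row in zip(frame, effect, person_mask):
        out_row: List[Pixel] = []
        for f_px, e_px, m in zip(frame_row, effect_row, mask_row):
            # Person pixels (m = 1) keep the untouched camera image;
            # background pixels (m = 0) take the effect; soft edges mix the two.
            out_row.append(tuple(m * f + (1.0 - m) * e for f, e in zip(f_px, e_px)))
        out.append(out_row)
    return out


frame = [[(0.9, 0.8, 0.7), (0.2, 0.3, 0.4)]]   # camera pixels
effect = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]  # e.g. a stylized backdrop
mask = [[1.0, 0.0]]                             # left pixel belongs to a person
print(composite_keep_people(frame, effect, mask))
```

Using a soft mask rather than a hard cut-out lets the edges of a person fade into the effect instead of showing a jagged outline.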

What could this be in the future?

The last aspect I explored was probably the most complex to accomplish. Looking at various approaches to AI cinematography, such as Runway ML, I noticed that the effect is often applied to the full image, so I found it interesting to give the user more flexibility. The user should be able to “tap” on the reality they are observing to modify it as precisely as possible.

Want your dog to be a capybara? Now you can (only in your app).

This concept could be applied to the whole flow and, in my view, points to a new emerging model of interaction. The masking lets the user seamlessly mix reality with the backdrop generated by Stable Diffusion, and juxtapose digital and non-digital information according to logic that can be defined in several ways (alignment, color, light, etc.).
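The tap interaction can be sketched in two steps: look up which segment the tapped pixel belongs to, then use that segment as the mask for blending generated content into the real view. A minimal sketch, assuming a segmentation model (for example one in the spirit of SAM) has already produced an integer label map aligned with the camera image. `mask_from_tap` and `blend_generated` are hypothetical helpers, and single-channel (grayscale) values are used for brevity.

```python
from typing import List

LabelMap = List[List[int]]
Gray = List[List[float]]


def mask_from_tap(labels: LabelMap, x: int, y: int) -> Gray:
    """Return a binary mask (1.0 / 0.0) of the segment under the tapped pixel (x, y)."""
    target = labels[y][x]  # row-major: y selects the row, x the column
    return [[1.0 if v == target else 0.0 for v in row] for row in labels]


def blend_generated(real: Gray, generated: Gray, mask: Gray) -> Gray:
    """Replace reality with generated content only where mask = 1."""
    return [
        [m * g + (1.0 - m) * r for r, g, m in zip(r_row, g_row, m_row)]
        for r_row, g_row, m_row in zip(real, generated, mask)
    ]


labels = [[0, 0, 1],
          [0, 1, 1]]                    # 0 = floor, 1 = dog (illustrative labels)
real = [[10.0] * 3, [10.0] * 3]         # camera view
generated = [[200.0] * 3, [200.0] * 3]  # e.g. a generated capybara texture

mask = mask_from_tap(labels, x=2, y=0)  # the tap lands on segment 1
print(blend_generated(real, generated, mask))
```

Only the tapped segment changes, which is the difference from applying an effect to the full image.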

This prototype provides the user with a visual guideline for understanding the interface of the future, where natural actions and thoughts will be the catalyst for a symbiosis with external devices.

It also has enough features to be of practical value as a real-time, “pocketable” post-production application.

While the app has practical applications in real-time post-production or content creation, its true value lies in the possibilities it represents. As we continue to explore and push the boundaries of this new type of interaction, we’ll see new and exciting applications emerge that we can’t even imagine today.

The future is exciting, and this prototype is just the beginning of something I still need to fully comprehend. It’s an invitation to imagine and explore the possibilities of this new way of interacting with the world around us.

Finally, I would like to credit Francesco Colonnese, who helped me navigate the growing AI landscape and contributed significantly to the work I will showcase.

* * *

Alessio will give a talk at the design conference Design Matters Tokyo 23, which will take place in Tokyo and online on June 2-3, 2023. His talk is titled “Everything Interface”.

If you want to learn more about Alessio’s work and connect with him, head to LinkedIn, GitHub, or Twitter, join him on Discord, or visit his website.

Cover image: created by Alessio Grancini using DALL-E.